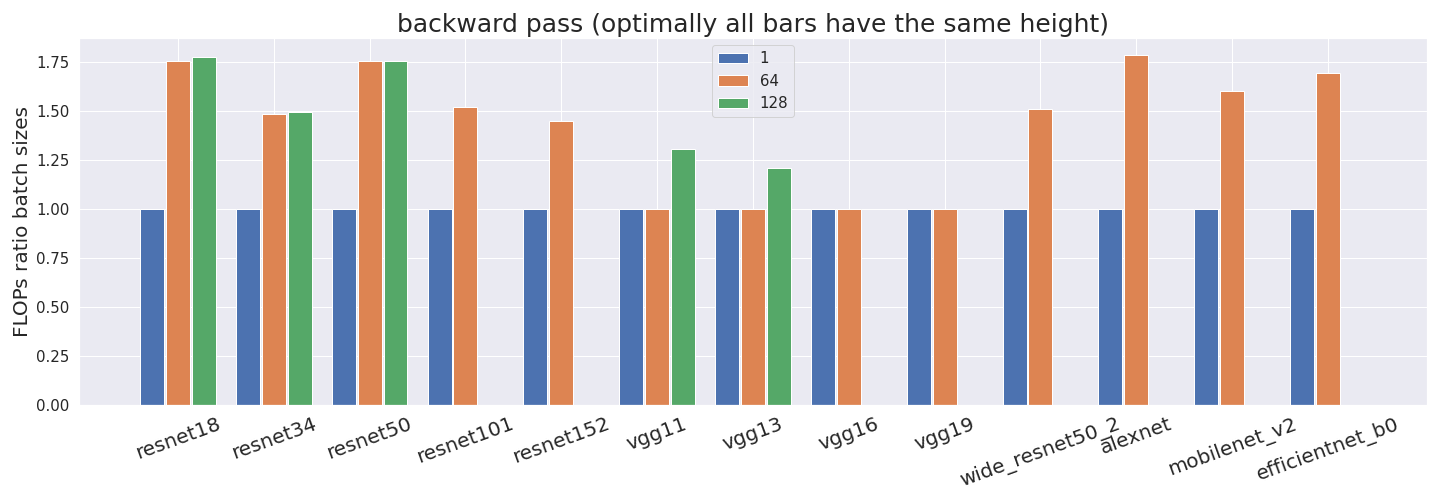

How to Measure FLOP/s for Neural Networks Empirically? – Epoch

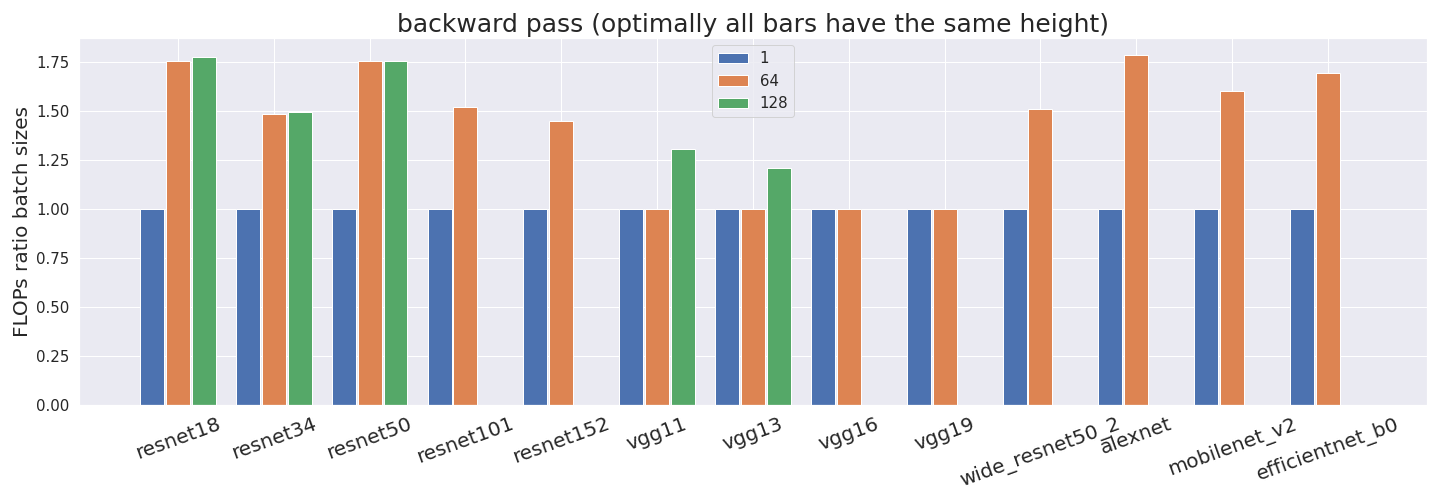

Computing the utilization rate for multiple Neural Network architectures.

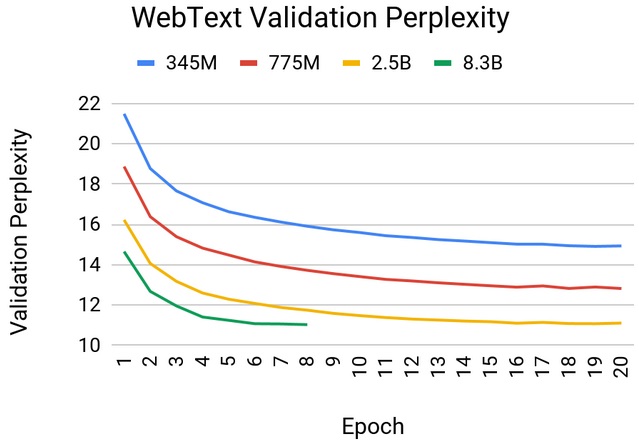

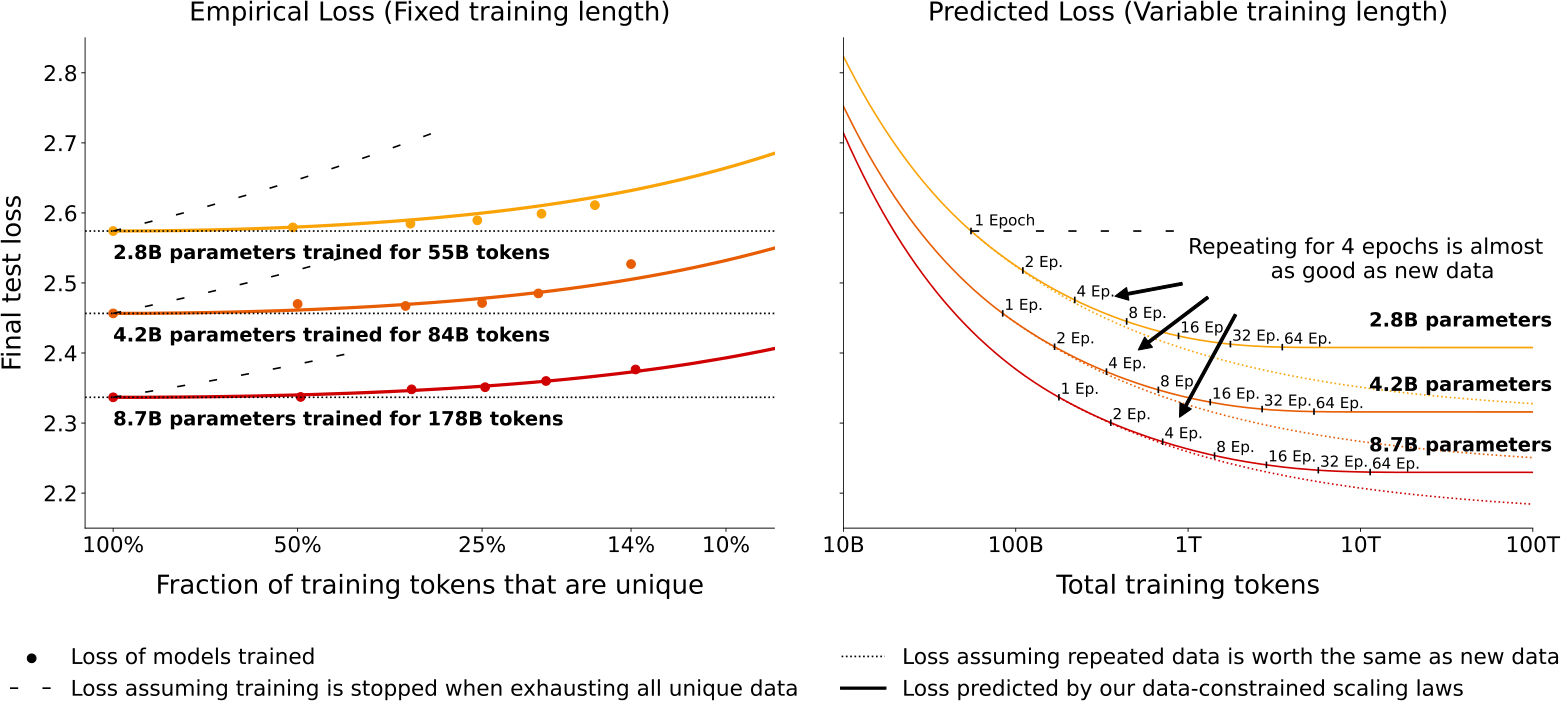

Scaling Laws for AI And Some Implications

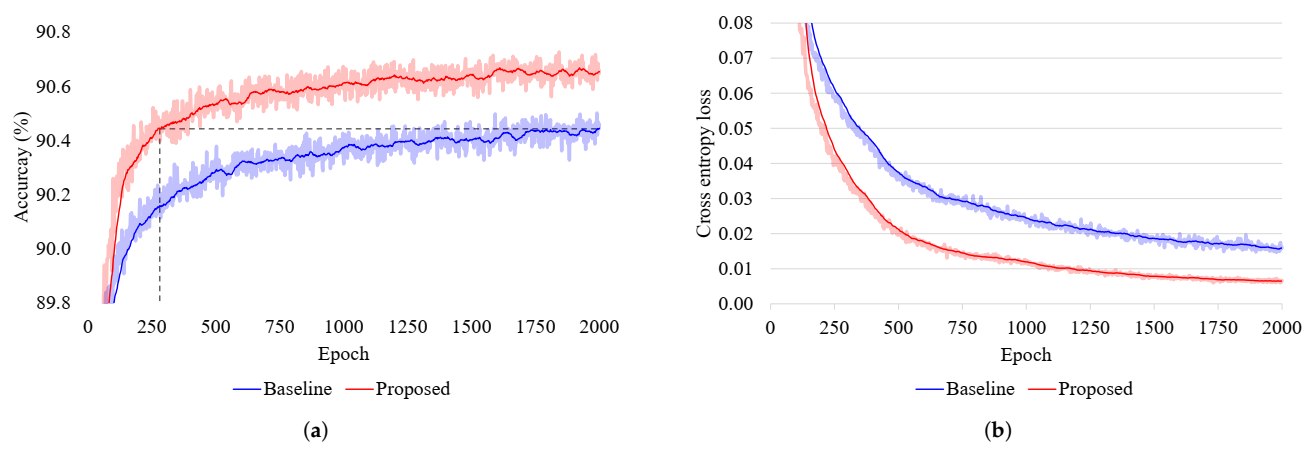

Empirical evaluation of filter pruning methods for acceleration of convolutional neural network

SiaLog: detecting anomalies in software execution logs using the siamese network

NVIDIA Clocks World's Fastest BERT Training Time and Largest Transformer Based Model, Paving Path For Advanced Conversational AI

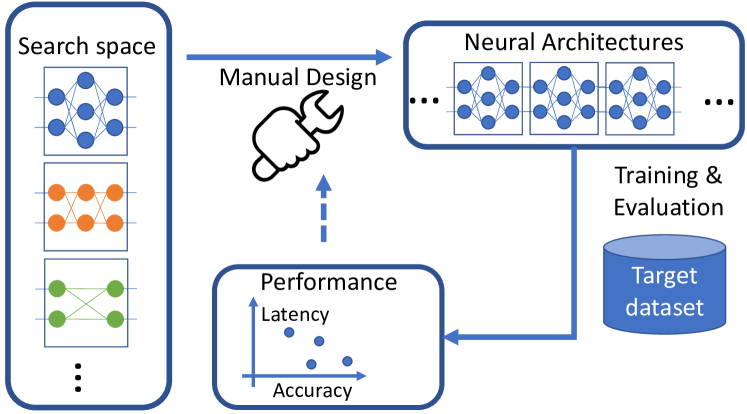

[1812.03443] FBNet: Hardware-Aware Efficient ConvNet Design via Differentiable Neural Architecture Search

NeurIPS 2023

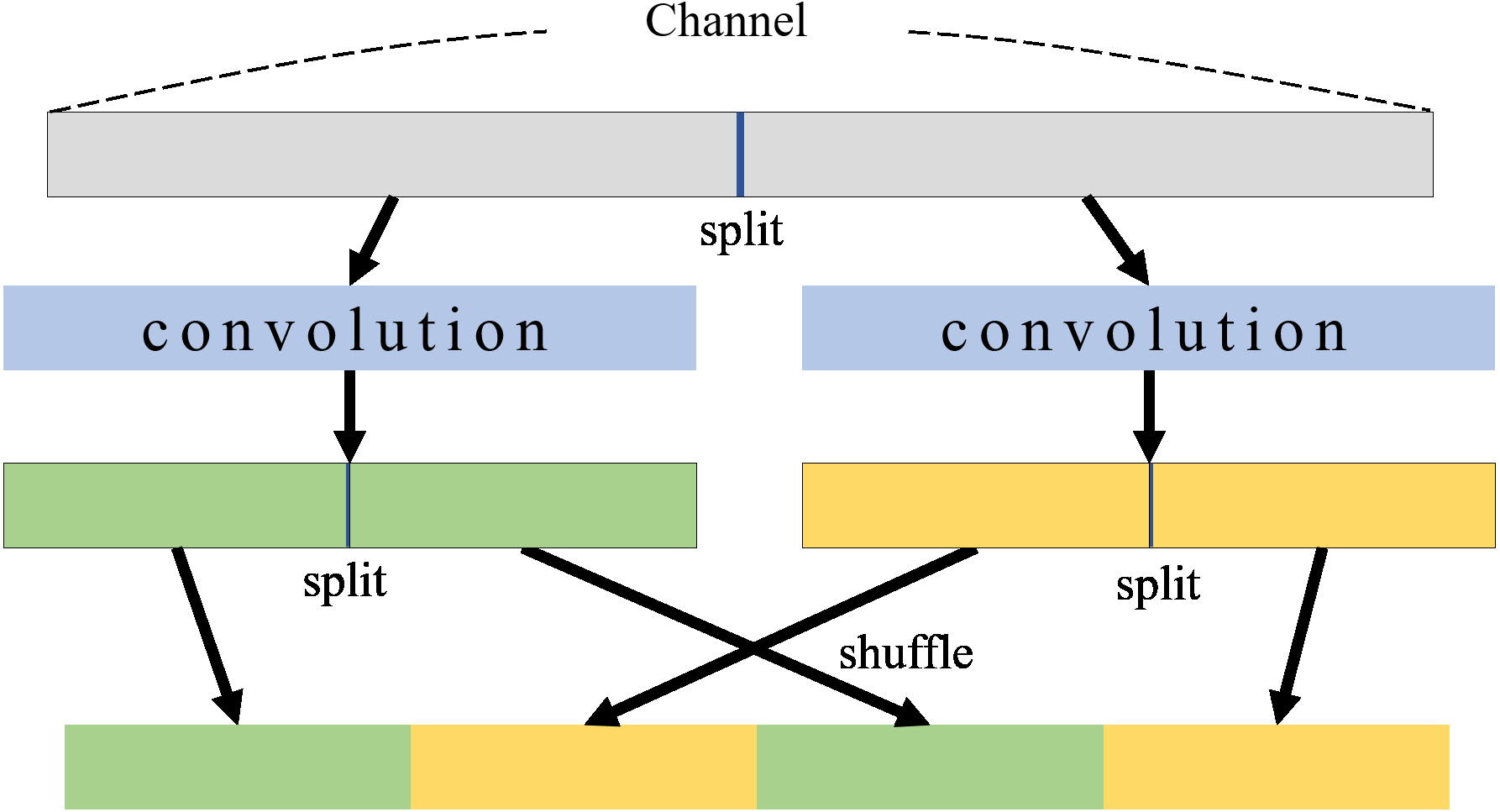

PresB-Net: parametric binarized neural network with learnable activations and shuffled grouped convolution [PeerJ]

Mutual information estimates as function of the layer index, for the

FLOPS Calculation [D] : r/MachineLearning

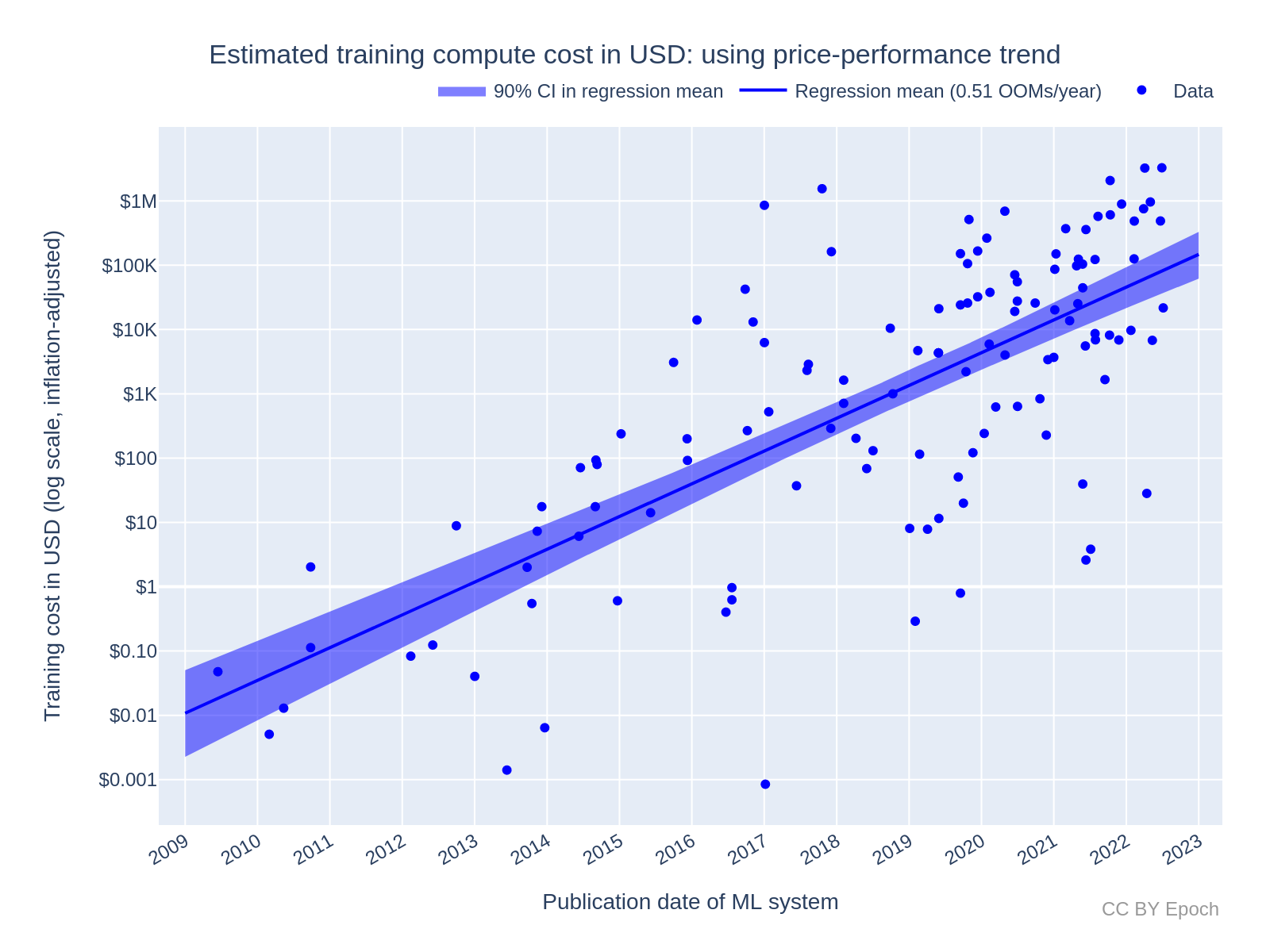

Trends in the Dollar Training Cost of Machine Learning Systems – Epoch

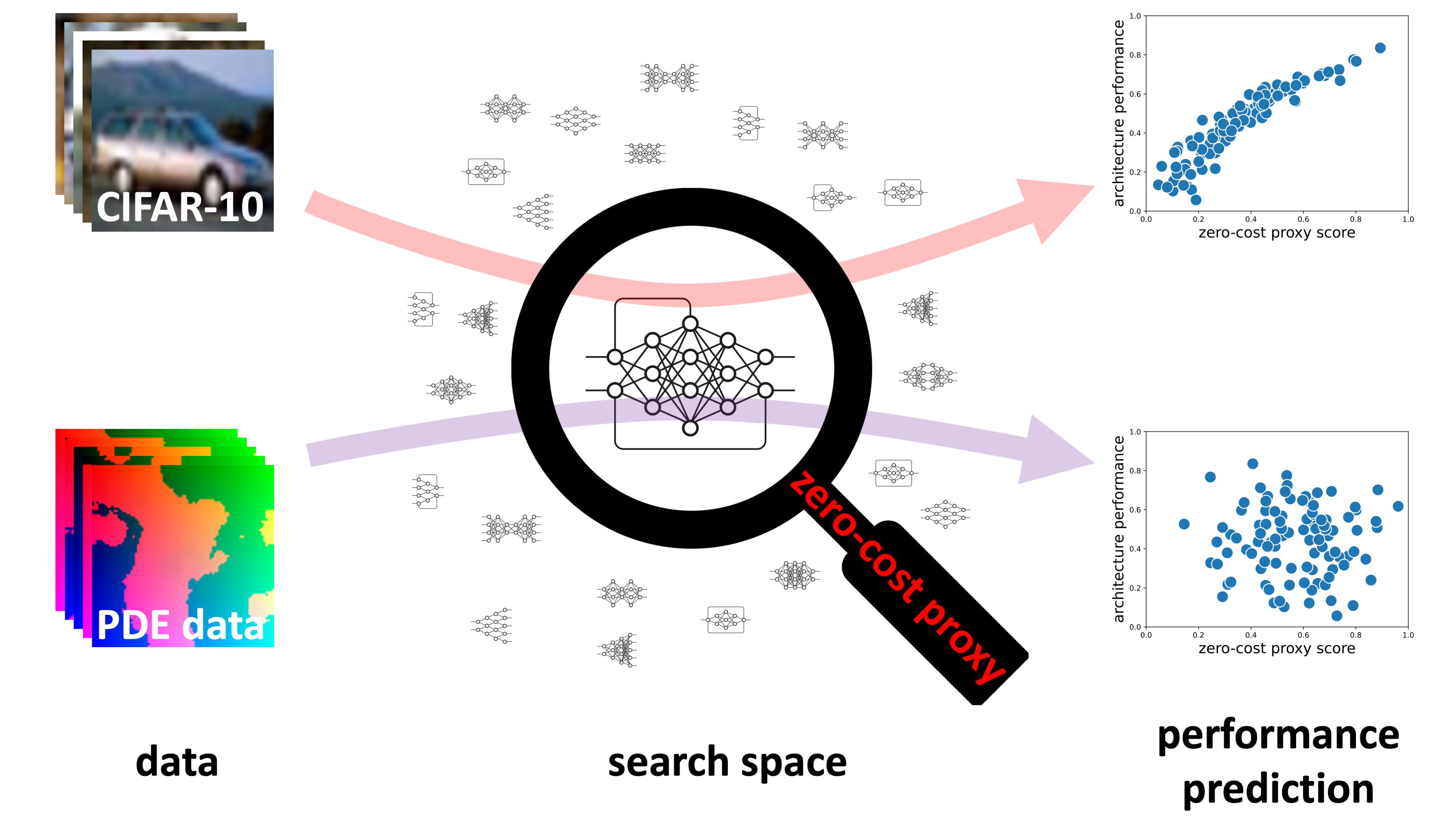

A Deeper Look at Zero-Cost Proxies for Lightweight NAS · The ICLR Blog Track

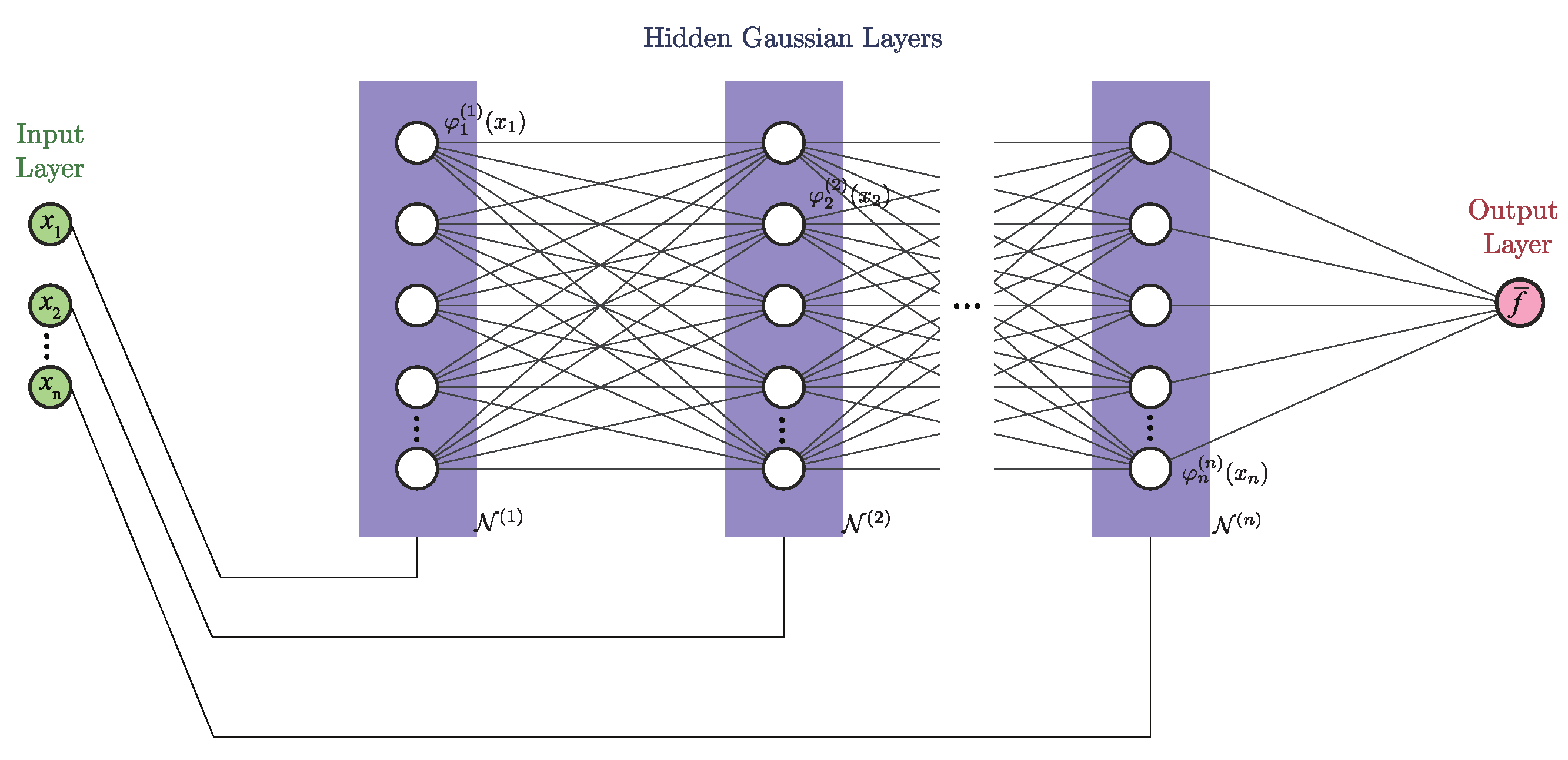

Algorithms, Free Full-Text

How to measure FLOP/s for Neural Networks empirically? — LessWrong

Applied Sciences, Free Full-Text

Differentiable neural architecture learning for efficient neural networks - ScienceDirect