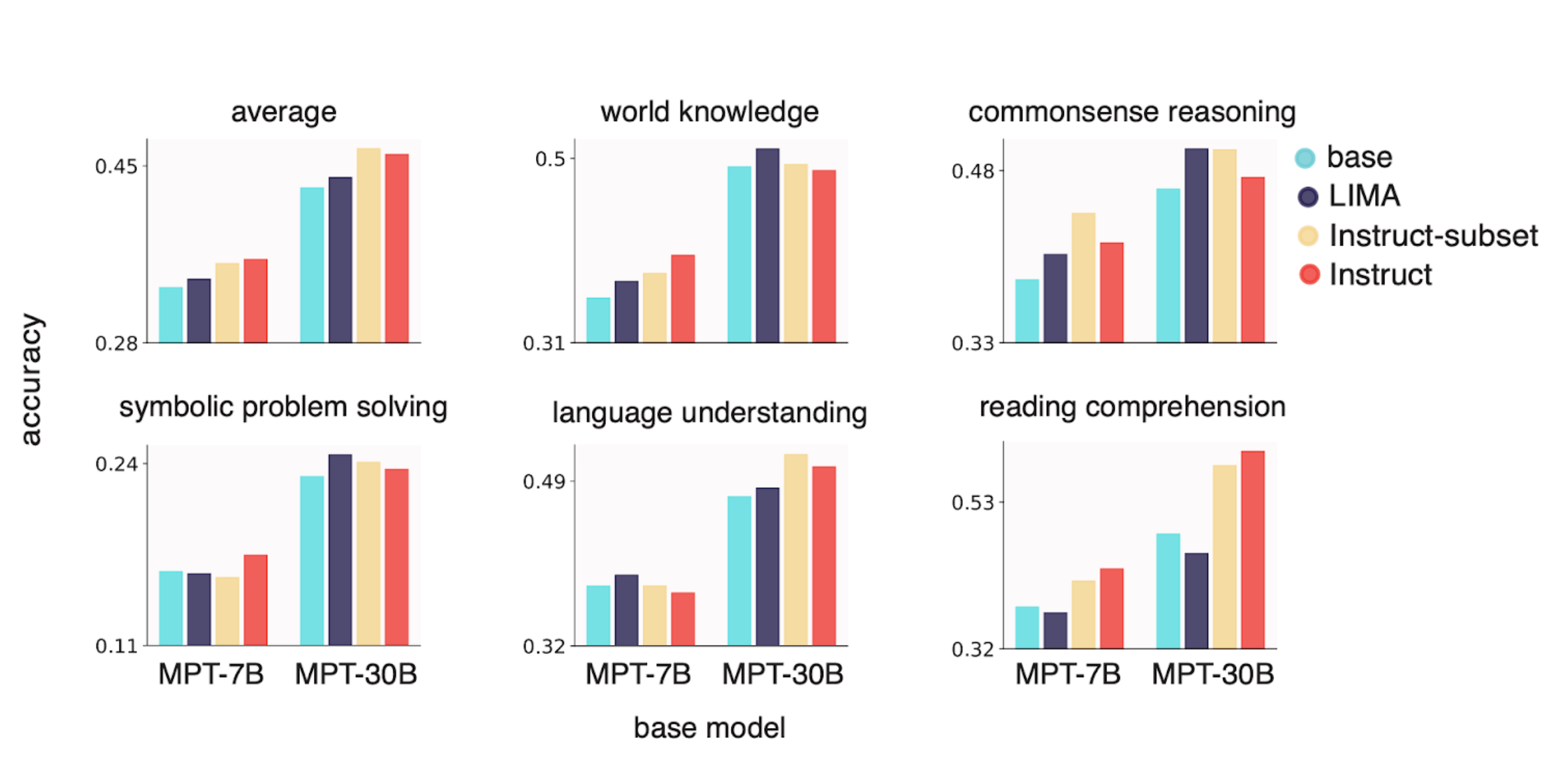

MPT-30B: Raising the bar for open-source foundation models

Introducing MPT-30B, a new, more powerful member of our Foundation Series of open-source models, trained with an 8k context length on NVIDIA H100 Tensor Core GPUs.

Computational Power and AI - AI Now Institute

Raising the Bar Winter 2023 Volume 6 Issue 1 by AccessLex Institute - Issuu

The History of Open-Source LLMs: Better Base Models (Part Two), by Cameron R. Wolfe, Ph.D.

(PDF) A Review of Transformer Models

How to Use MosaicML MPT Large Language Model on Vultr Cloud GPU

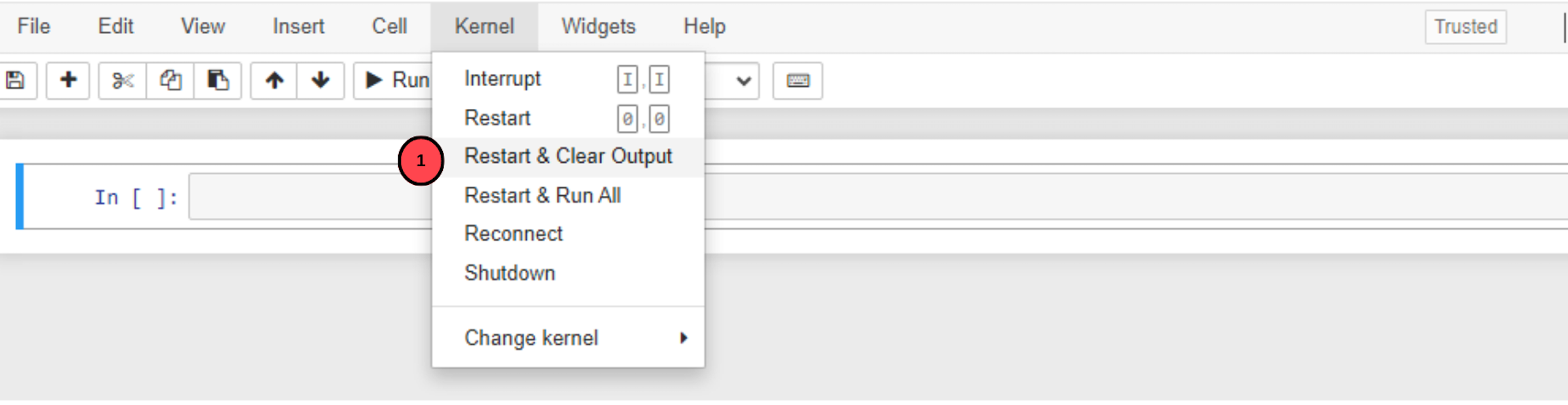

LIMIT: Less Is More for Instruction Tuning

12 Open Source LLMs to Watch

Benchmarking and Defending Against Indirect Prompt Injection Attacks on Large Language Models

maddes8cht/mosaicml-mpt-30b-instruct-gguf · Hugging Face

Is Mosaic's MPT-30B Ready For Our Commercial Use?, by Yeyu Huang

Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave (Part 1)

The List of 11 Most Popular Open Source LLMs of 2023 (Lakera)