DeepSpeed: Accelerating large-scale model inference and training

DeepSpeed (@MSFTDeepSpeed) / X

SW/HW Co-optimization Strategy for LLMs — Part 2 (Software), by Liz Li

Elvin Aghammadzada on LinkedIn: #transformers #llms #deepspeed #tensorrt

[2201.05596] DeepSpeed-MoE: Advancing Mixture-of-Experts Inference

Machine Learning and Inference Laboratory - Photos from Conferences, thomas mitchell machine learning

Toward INT8 Inference: Deploying Quantization-Aware Trained

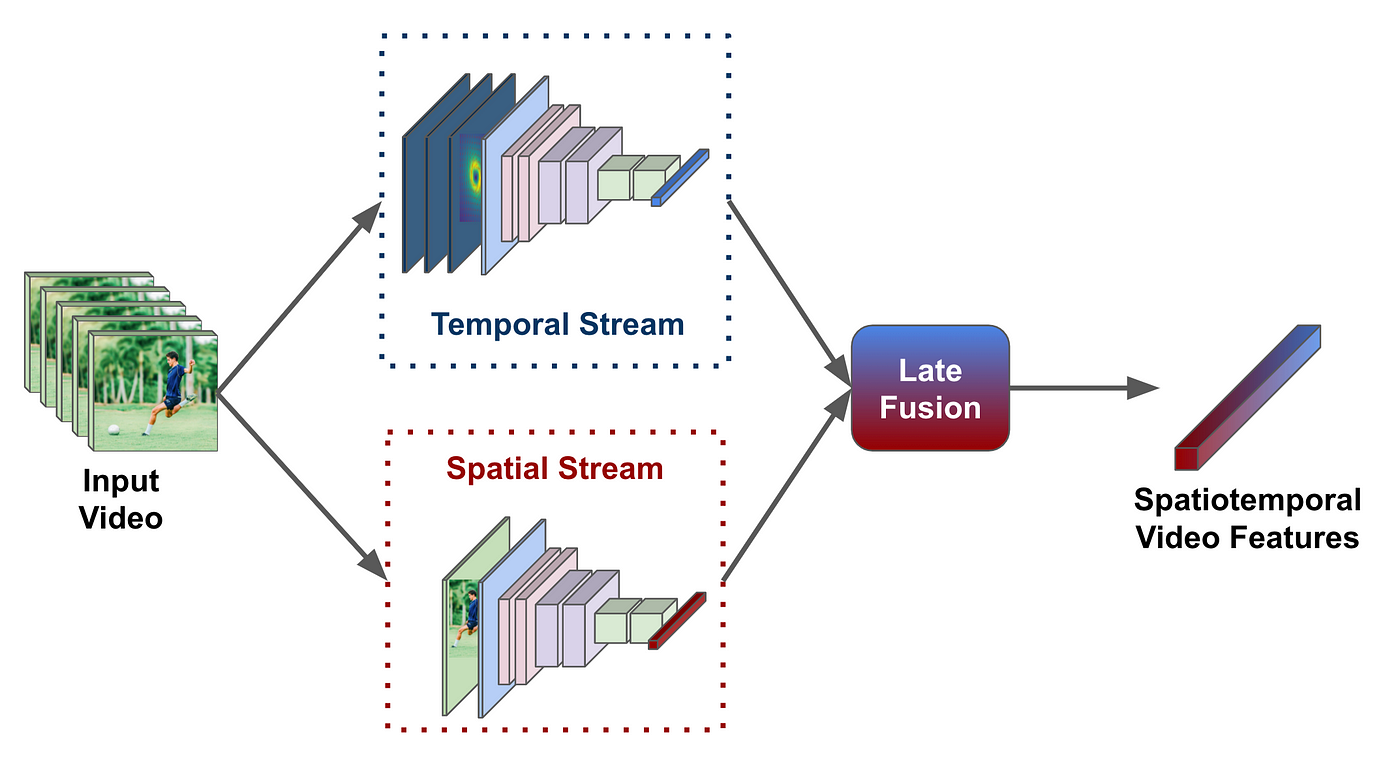

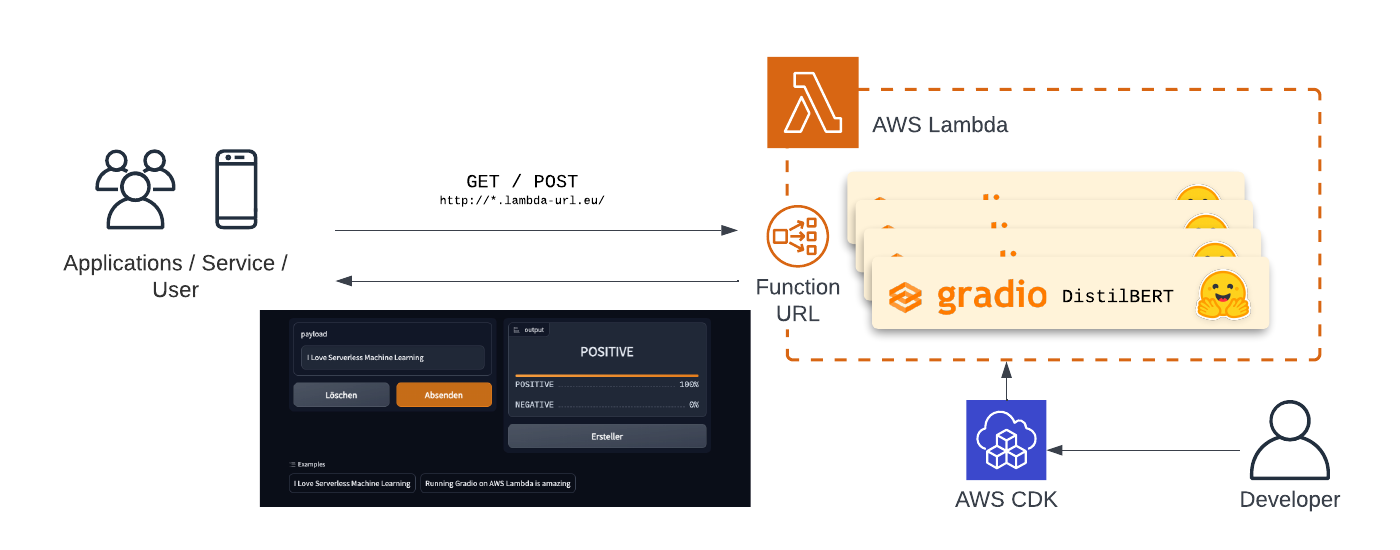

Accelerate Stable Diffusion inference with DeepSpeed-Inference on GPUs

Yuxiong He on LinkedIn: DeepSpeed powers 8x larger MoE model

DeepSpeedExamples/applications/DeepSpeed-Chat/README.md at master

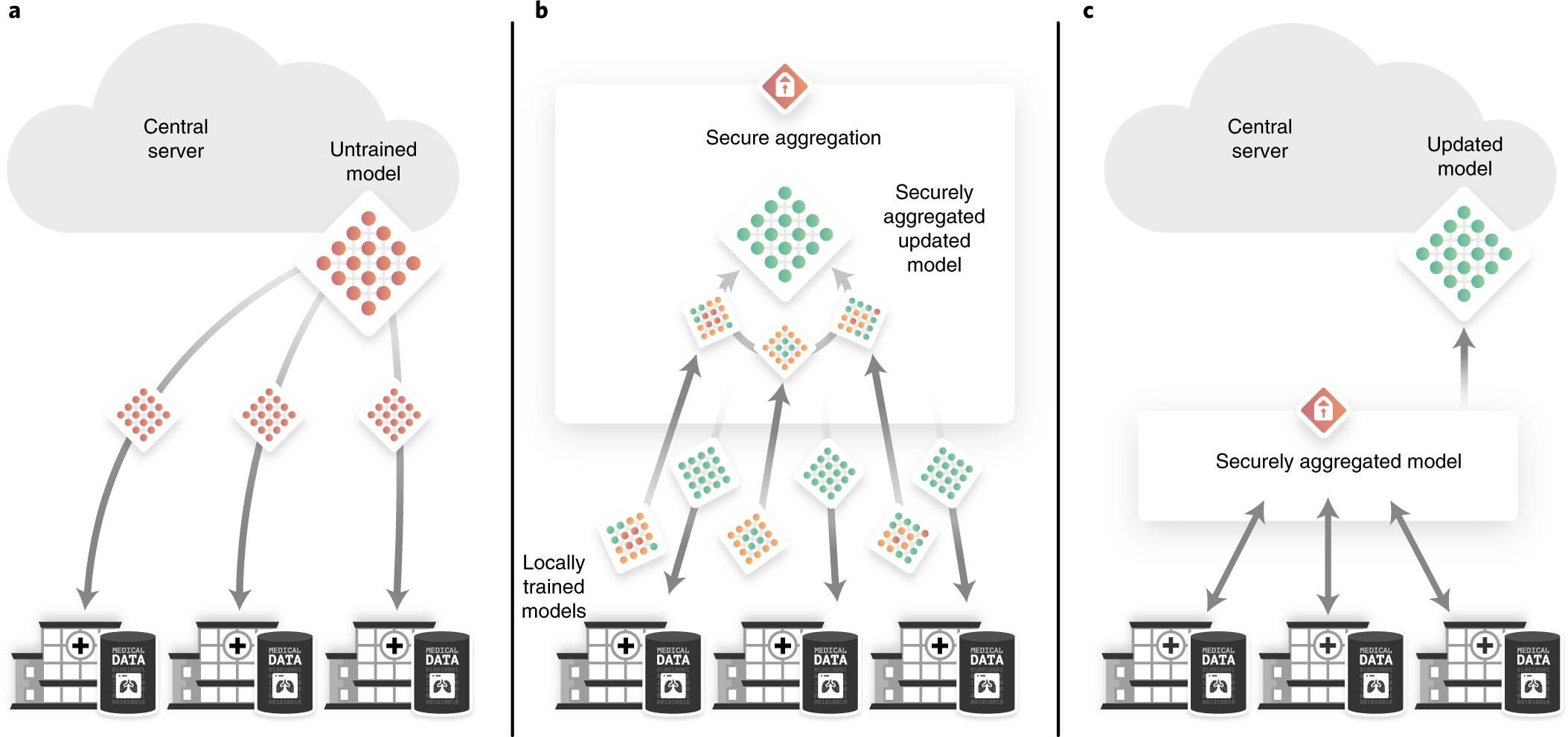

Optimization Strategies for Large-Scale DL Training Workloads