GitHub - bytedance/effective_transformer: Running BERT without Padding

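The idea behind "running BERT without padding" is that token-wise layers do not need to touch pad positions. The valid tokens are gathered into one dense sequence, the per-token work runs on that sequence, and the results are scattered back into the padded layout when a padded shape is needed again. The sketch below shows that gather/scatter bookkeeping in pure Python on lists of token IDs. It is not code from the effective_transformer repository, which works on GPU tensors. The names `remove_padding`, `restore_padding` and the `+ 1` stand-in for a token-wise layer are hypothetical and exist only for illustration.

```python
from typing import List, Tuple


def remove_padding(batch: List[List[int]],
                   mask: List[List[int]]) -> Tuple[List[int], List[int]]:
    """Gather the non-pad tokens of a padded batch into one flat list.

    Also returns, for every kept token, its flat index in the original
    [batch_size * seq_len] layout so it can be scattered back later.
    """
    seq_len = len(batch[0])
    packed: List[int] = []
    offsets: List[int] = []
    for b, (row, row_mask) in enumerate(zip(batch, mask)):
        for t, (token, keep) in enumerate(zip(row, row_mask)):
            if keep:  # mask value 1 marks a real token, 0 marks padding
                packed.append(token)
                offsets.append(b * seq_len + t)
    return packed, offsets


def restore_padding(packed: List[int], offsets: List[int],
                    batch_size: int, seq_len: int, pad: int = 0) -> List[List[int]]:
    """Scatter packed values back into a padded [batch_size][seq_len] layout."""
    flat = [pad] * (batch_size * seq_len)
    for value, index in zip(packed, offsets):
        flat[index] = value
    return [flat[b * seq_len:(b + 1) * seq_len] for b in range(batch_size)]


# Two sequences padded to length 6 (0 is the pad id in this example).
batch = [[101, 7, 8, 102, 0, 0],
         [101, 9, 102, 0, 0, 0]]
mask = [[1, 1, 1, 1, 0, 0],
        [1, 1, 1, 0, 0, 0]]

packed, offsets = remove_padding(batch, mask)
# packed  -> [101, 7, 8, 102, 101, 9, 102]  (7 of 12 positions are real)
# offsets -> [0, 1, 2, 3, 6, 7, 8]

# Hypothetical stand-in for a token-wise layer, applied only to real tokens.
processed = [x + 1 for x in packed]
restored = restore_padding(processed, offsets, batch_size=2, seq_len=6)
# restored -> [[102, 8, 9, 103, 0, 0], [102, 10, 103, 0, 0, 0]]
```

In this toy batch only 7 of the 12 positions reach the token-wise step. Attention still needs per-sequence structure, which is why the padded layout is rebuilt around it.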

inference · GitHub Topics · GitHub

jalammar.github.io/notebooks/bert/A_Visual_Notebook_to_Using_BERT_for_the_First_Time.ipynb at master · jalammar/jalammar.github.io · GitHub

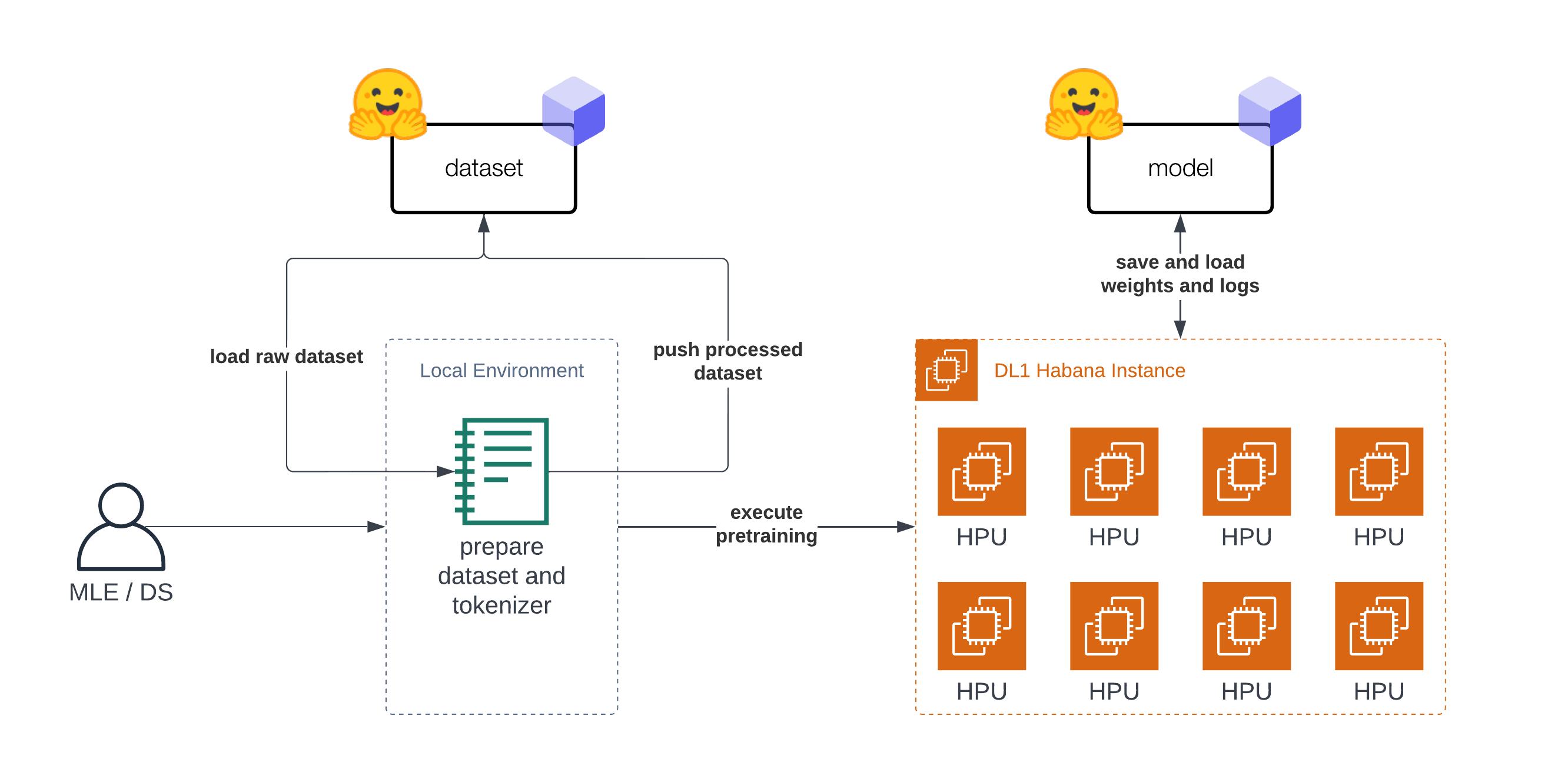

Pre-Training BERT with Hugging Face Transformers and Habana Gaudi

Non Packed Dataset Format? · Issue #637 · huggingface/trl · GitHub

process stuck at LineByLineTextDataset. training not starting · Issue #5944 · huggingface/transformers · GitHub

Unable to load the downloaded BERT model offline on a local machine: could not find config.json, and Error no file named ['pytorch_model.bin', 'tf_model.h5', 'model.ckpt.index']

Tokenizers can not pad tensorized inputs · Issue #15447 · huggingface/transformers · GitHub

Loading fine_tuned BertModel fails due to prefix error · Issue #217 · huggingface/transformers · GitHub

GitHub - qbxlvnf11/BERT-series: Implementation of BERT-based models